-

Kenya's economy faces climate change risks: World Bank

Kenya's economy faces climate change risks: World Bank

-

Fela Kuti: first African to get Grammys Lifetime Achievement Award

-

Cubans queue for fuel as Trump issues oil ultimatum

Cubans queue for fuel as Trump issues oil ultimatum

-

France rescues over 6,000 UK-bound Channel migrants in 2025

-

Analysts say Kevin Warsh a safe choice for US Fed chair

Analysts say Kevin Warsh a safe choice for US Fed chair

-

Fela Kuti to be first African to get Grammys Lifetime Achievement Award

-

Gold, silver prices tumble as investors soothed by Trump's Fed pick

Gold, silver prices tumble as investors soothed by Trump's Fed pick

-

Social media fuels surge in UK men seeking testosterone jabs

-

Trump nominates former US Fed official as next central bank chief

Trump nominates former US Fed official as next central bank chief

-

Chad, France eye economic cooperation as they reset strained ties

-

Artist chains up thrashing robot dog to expose AI fears

Artist chains up thrashing robot dog to expose AI fears

-

Dutch watchdog launches Roblox probe over 'risks to children'

-

Cuddly Olympics mascot facing life or death struggle in the wild

Cuddly Olympics mascot facing life or death struggle in the wild

-

UK schoolgirl game character Amelia co-opted by far-right

-

Panama court annuls Hong Kong firm's canal port concession

Panama court annuls Hong Kong firm's canal port concession

-

Asian stocks hit by fresh tech fears as gold retreats from peak

-

Apple earnings soar as China iPhone sales surge

Apple earnings soar as China iPhone sales surge

-

With Trump administration watching, Canada oil hub faces separatist bid

-

What are the key challenges awaiting the new US Fed chair?

What are the key challenges awaiting the new US Fed chair?

-

Moscow records heaviest snowfall in over 200 years

-

Polar bears bulk up despite melting Norwegian Arctic: study

Polar bears bulk up despite melting Norwegian Arctic: study

-

Waymo gears up to launch robotaxis in London this year

-

French IT group Capgemini under fire over ICE links

French IT group Capgemini under fire over ICE links

-

Czechs wind up black coal mining in green energy switch

-

EU eyes migration clampdown with push on deportations, visas

EU eyes migration clampdown with push on deportations, visas

-

Northern Mozambique: massive gas potential in an insurgency zone

-

Gold demand hits record high on Trump policy doubts: industry

Gold demand hits record high on Trump policy doubts: industry

-

UK drugs giant AstraZeneca announces $15 bn investment in China

-

Ghana moves to rewrite mining laws for bigger share of gold revenues

Ghana moves to rewrite mining laws for bigger share of gold revenues

-

Russia's sanctioned oil firm Lukoil to sell foreign assets to Carlyle

-

Gold soars towards $5,600 as Trump rattles sabre over Iran

Gold soars towards $5,600 as Trump rattles sabre over Iran

-

Deutsche Bank logs record profits, as new probe casts shadow

-

Vietnam and EU upgrade ties as EU chief visits Hanoi

Vietnam and EU upgrade ties as EU chief visits Hanoi

-

Hongkongers snap up silver as gold becomes 'too expensive'

-

Gold soars past $5,500 as Trump sabre rattles over Iran

Gold soars past $5,500 as Trump sabre rattles over Iran

-

Samsung logs best-ever profit on AI chip demand

-

China's ambassador warns Australia on buyback of key port

China's ambassador warns Australia on buyback of key port

-

As US tensions churn, new generation of protest singers meet the moment

-

Venezuelans eye economic revival with hoped-for oil resurgence

Venezuelans eye economic revival with hoped-for oil resurgence

-

Samsung Electronics posts record profit on AI demand

-

Formerra to Supply Foster Medical Compounds in Europe

Formerra to Supply Foster Medical Compounds in Europe

-

French Senate adopts bill to return colonial-era art

-

Tesla profits tumble on lower EV sales, AI spending surge

Tesla profits tumble on lower EV sales, AI spending surge

-

Meta shares jump on strong earnings report

-

Anti-immigration protesters force climbdown in Sundance documentary

Anti-immigration protesters force climbdown in Sundance documentary

-

Springsteen releases fiery ode to Minneapolis shooting victims

-

SpaceX eyes IPO timed to planet alignment and Musk birthday: report

SpaceX eyes IPO timed to planet alignment and Musk birthday: report

-

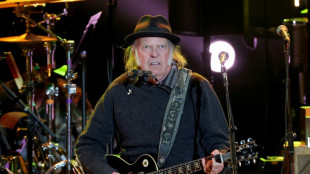

Neil Young gifts music to Greenland residents for stress relief

-

Fear in Sicilian town as vast landslide risks widening

Fear in Sicilian town as vast landslide risks widening

-

King Charles III warns world 'going backwards' in climate fight

AI's blind spot: tools fail to detect their own fakes

When outraged Filipinos turned to an AI-powered chatbot to verify a viral photograph of a lawmaker embroiled in a corruption scandal, the tool failed to detect it was fabricated -- even though it had generated the image itself.

Internet users are increasingly turning to chatbots to verify images in real time, but the tools often fail, raising questions about their visual debunking capabilities at a time when major tech platforms are scaling back human fact-checking.

In many cases, the tools wrongly identify images as real even when those images were produced by the same generative models that power the tools, further muddying an online information landscape awash with AI-generated fakes.

Among them is a fabricated image circulating on social media of Elizaldy Co, a former Philippine lawmaker charged by prosecutors in a multibillion-dollar flood-control corruption scam that sparked massive protests in the disaster-prone country.

The image of Co, whose whereabouts have been unknown since the official probe began, appeared to show him in Portugal.

When online sleuths tracking him asked Google's new AI mode whether the image was real, it incorrectly said it was authentic.

AFP's fact-checkers tracked down its creator and determined that the image was generated using Google AI.

"These models are trained primarily on language patterns and lack the specialized visual understanding needed to accurately identify AI-generated or manipulated imagery," Alon Yamin, chief executive of AI content detection platform Copyleaks, told AFP.

"With AI chatbots, even when an image originates from a similar generative model, the chatbot often provides inconsistent or overly generalized assessments, making them unreliable for tasks like fact-checking or verifying authenticity."

Google did not respond to AFP's request for comment.

- 'Distinguishable from reality' -

AFP found similar examples of AI tools failing to verify their own creations.

During last month's deadly protests over lucrative benefits for senior officials in Pakistan-administered Kashmir, social media users shared a fabricated image purportedly showing men marching with flags and torches.

An AFP analysis found it was created using Google's Gemini AI model.

But Gemini and Microsoft's Copilot falsely identified it as a genuine image of the protest.

"This inability to correctly identify AI images stems from the fact that they (AI models) are programmed only to mimic well," Rossine Fallorina, from the nonprofit Sigla Research Center, told AFP.

"In a sense, they can only generate things to resemble. They cannot ascertain whether the resemblance is actually distinguishable from reality."

Earlier this year, Columbia University's Tow Center for Digital Journalism tested the ability of seven AI chatbots -- including ChatGPT, Perplexity, Grok, and Gemini -- to verify 10 images from photojournalists of news events.

All seven models failed to correctly identify the provenance of the photos, the study said.

- 'Shocked' -

AFP tracked down the source of Co's photo that garnered over a million views across social media -- a middle-aged web developer in the Philippines, who said he created it "for fun" using Nano Banana, Gemini's AI image generator.

"Sadly, a lot of people believed it," he told AFP, requesting anonymity to avoid a backlash.

"I edited my post -- and added 'AI generated' to stop the spread -- because I was shocked at how many shares it got."

Such cases show how AI-generated photos flooding social platforms can look virtually identical to real imagery.

The trend has fueled concerns as surveys show online users are increasingly shifting from traditional search engines to AI tools for gathering and verifying information.

The shift comes as Meta announced earlier this year it was ending its third-party fact-checking program in the United States, turning over the task of debunking falsehoods to ordinary users under a model known as "Community Notes."

Human fact-checking has long been a flashpoint in hyperpolarized societies, where conservative advocates accuse professional fact-checkers of liberal bias, a charge the fact-checkers reject.

AFP currently works in 26 languages with Meta's fact-checking program, including in Asia, Latin America, and the European Union.

Researchers say AI models can be useful to professional fact-checkers, helping to quickly geolocate images and spot visual clues to establish authenticity. But they caution that the tools cannot replace the work of trained human fact-checkers.

"We can't rely on AI tools to combat AI in the long run," Fallorina said.

burs-ac/sla/sms

St.Ch.Baker--CPN